Introduction: Why AI Models Are No Longer the Product

If you look at how most organisations talk about AI, the focus is almost always on the model: which one to choose, how accurate it is, or whether it should be built in-house or accessed via an API.

But this framing is increasingly outdated.

In practice, AI models commoditise quickly. New architectures emerge, APIs improve, costs fall, and yesterday’s differentiator becomes today’s baseline. What does not commoditise — and rarely receives enough attention — is the system that feeds, shapes, governs, and sustains those models.

That system is the AI data pipeline.

In modern AI products, data pipelines are not plumbing. They define what the model can see, how fresh its inputs are, how errors are detected, and how trust is maintained over time. In many cases, they are the product.

This article argues that AI data pipelines are the real source of long-term value, and that engineering leaders who treat them as first-class products build more resilient, trustworthy, and scalable AI systems.

1. Models Commoditise — Pipelines Compound

The last few years have made one thing clear: access to powerful models is no longer scarce.

Foundation models, open-source alternatives, and managed APIs have lowered the barrier to entry dramatically. Two teams can start with the same model and produce radically different outcomes — not because of modelling brilliance, but because of data quality and system design.

Data pipelines compound value because they:

Encode organisational knowledge

Improve with usage and feedback

Create switching costs

Enable faster iteration with lower risk

While models can be swapped, pipelines accumulate context — about customers, operations, edge cases, and historical behaviour. Over time, this context becomes extremely difficult for competitors to replicate.

This is why AI maturity is less about “which model are you using?” and more about “how reliably does your system turn data into decisions?”

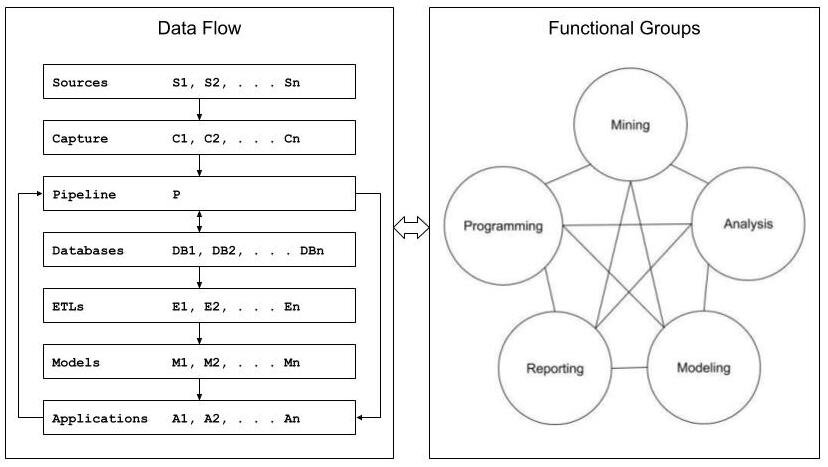

2. What an AI Data Pipeline Really Includes

When teams hear “data pipeline,” they often think narrowly: ingestion, transformation, storage.

In AI systems, pipelines are broader and more interconnected. A production-grade AI data pipeline typically includes:

Data ingestion (batch and real-time)

Feature engineering logic

Feature stores shared across models

Freshness and latency guarantees

Training–serving consistency

Monitoring and drift detection

Auditability and lineage

Access control and ownership

Crucially, these elements operate across the entire lifecycle of an AI system, not just training.

Once you see pipelines this way, it becomes clear why many AI initiatives stall: teams optimise models in isolation while reliability quietly erodes in the system around them.
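Of the components listed above, drift detection is one of the easiest to make concrete. The sketch below is a minimal illustration rather than a production monitor: it computes a population stability index (PSI) between a reference sample and a live sample of a single numeric feature. The function name, the bin count, and the 0.2 alert threshold are assumptions made for this example; 0.2 is a commonly quoted rule of thumb, not a standard.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    live: Sequence[float],
    bins: int = 10,
    epsilon: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # epsilon keeps empty bins from producing log(0) or a division by zero.
        return [max(c / len(values), epsilon) for c in counts]

    ref_shares = bucket_shares(reference)
    live_shares = bucket_shares(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_shares, live_shares))


# Illustrative data: the live sample is the reference shifted upwards by 50.
reference_sample = [float(x) for x in range(100)]
live_sample = [x + 50.0 for x in reference_sample]

psi = population_stability_index(reference_sample, live_sample)
drift_alert = psi > 0.2  # assumed threshold; tune per feature
```

Running a check like this on a schedule, per feature, is one way to surface degradation that never shows up as an outright error.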

3. Feature Engineering Systems: Where Value Is Actually Created

Feature engineering is often treated as a preparatory step — something you do before “real” AI work begins. In reality, it is where much of the product logic lives.

Well-designed feature engineering systems:

Encode business assumptions

Standardise definitions across teams

Prevent duplicated logic

Enable faster experimentation without rework

Feature stores are a natural evolution here. They shift features from being ad-hoc artefacts to shared, governed assets. This reduces inconsistencies between training and inference while increasing organisational leverage.
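One way to picture a shared, governed feature is a single registered definition that both the training job and the serving path call. The following is a minimal in-memory sketch, not the API of any real feature store; `FeatureRegistry`, `FeatureDefinition`, and the `days_since_last_order` feature are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Callable, Dict, Mapping


@dataclass(frozen=True)
class FeatureDefinition:
    name: str
    owner: str    # team accountable for the definition
    version: int
    compute: Callable[[Mapping[str, Any]], float]


class FeatureRegistry:
    """Hypothetical in-memory registry; a real feature store adds storage and serving."""

    def __init__(self) -> None:
        self._features: Dict[str, FeatureDefinition] = {}

    def register(self, feature: FeatureDefinition) -> None:
        # Refuse silent redefinition so two teams cannot diverge on one name.
        if feature.name in self._features:
            raise ValueError(f"feature {feature.name!r} is already defined")
        self._features[feature.name] = feature

    def compute(self, name: str, record: Mapping[str, Any]) -> float:
        # Training and serving both go through this one code path.
        return self._features[name].compute(record)


def days_since_last_order(record: Mapping[str, Any]) -> float:
    return float((record["as_of"] - record["last_order_date"]).days)


registry = FeatureRegistry()
registry.register(
    FeatureDefinition(
        name="days_since_last_order",
        owner="growth",
        version=1,
        compute=days_since_last_order,
    )
)

row = {"as_of": date(2024, 3, 10), "last_order_date": date(2024, 3, 1)}
training_value = registry.compute("days_since_last_order", row)
serving_value = registry.compute("days_since_last_order", row)
```

Because both values come from the same registered function, a change to the definition reaches training and inference together instead of drifting apart in two scripts.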

The strategic insight is simple:

Your features represent how your organisation understands the world.

Treating them as disposable scripts rather than durable products is an architectural mistake.

4. Freshness, Latency, and the Cost of Stale Intelligence

One of the most common failure modes in production AI systems is not incorrect predictions — it is irrelevant ones.

Data freshness matters because AI systems operate in dynamic environments. Customer behaviour changes. Supply chains shift. Risk profiles evolve. If your pipeline cannot deliver timely signals, even a highly accurate model produces predictions about conditions that no longer hold.

Engineering leaders should ask:

What is the acceptable staleness for this decision?

Where does latency accumulate in the pipeline?

How do we detect silent degradation?

Designing for freshness is not just a performance concern — it is a product decision with ethical and operational implications.
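The questions above can be turned into explicit checks. The sketch below assumes that each signal carries an event timestamp, that each pipeline stage records when it finished, and that the team has agreed a maximum acceptable staleness per decision. The `FreshnessPolicy` class, the `stage_latencies` helper, and the fraud and churn budgets are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict


@dataclass(frozen=True)
class FreshnessPolicy:
    decision: str
    max_staleness: timedelta

    def is_fresh(self, event_time: datetime, now: datetime) -> bool:
        return (now - event_time) <= self.max_staleness


def stage_latencies(stage_times: Dict[str, datetime]) -> Dict[str, timedelta]:
    """Time spent between consecutive pipeline stages, in insertion order."""
    names = list(stage_times)
    return {
        f"{a}->{b}": stage_times[b] - stage_times[a]
        for a, b in zip(names, names[1:])
    }


# Hypothetical budgets: a fraud decision tolerates far less staleness than a churn score.
fraud_policy = FreshnessPolicy("fraud_check", max_staleness=timedelta(seconds=30))
churn_policy = FreshnessPolicy("churn_score", max_staleness=timedelta(hours=24))

now = datetime(2024, 3, 10, 12, 0, tzinfo=timezone.utc)
last_event = datetime(2024, 3, 10, 11, 55, tzinfo=timezone.utc)  # five minutes old

fraud_ok = fraud_policy.is_fresh(last_event, now)  # five minutes exceeds the 30 s budget
churn_ok = churn_policy.is_fresh(last_event, now)  # well within the 24 h budget

# Where does the latency accumulate? Record a timestamp per stage and diff them.
latencies = stage_latencies(
    {
        "event": last_event,
        "ingested": last_event + timedelta(minutes=1),
        "features_ready": last_event + timedelta(minutes=4),
    }
)
```

The same five-minute-old signal passes one policy and fails the other, which is the point: acceptable staleness belongs to the decision, not to the pipeline as a whole.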

5. Ownership and Governance: Pipelines as Control Surfaces

As AI systems influence more decisions, questions of ownership and accountability become unavoidable.

Data pipelines are where governance becomes operational. They determine:

Who can introduce new data sources

How changes are reviewed and deployed

What is logged and retained

How decisions can be audited after the fact

This is why governance that exists only in policy documents rarely works. Without enforcement in pipelines, it remains aspirational.

Embedding governance into AI data pipelines allows organisations to scale responsibly without slowing innovation — a balance many leaders assume is impossible.
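As an illustration of enforcement living in the pipeline rather than in a policy document, the sketch below refuses to ingest from a source that has no recorded approval and writes an audit entry for every attempt, allowed or not. All names here (`GovernedIngestor`, `approve_source`, the source identifiers) are hypothetical, and a real system would persist the audit log rather than hold it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    actor: str
    source: str
    action: str
    allowed: bool


@dataclass
class GovernedIngestor:
    approved_sources: Dict[str, str] = field(default_factory=dict)  # source -> approver
    audit_log: List[AuditEntry] = field(default_factory=list)

    def approve_source(self, source: str, approver: str) -> None:
        self.approved_sources[source] = approver
        self._record(approver, source, "approve", allowed=True)

    def ingest(self, source: str, actor: str, rows: List[dict]) -> int:
        allowed = source in self.approved_sources
        # Log before acting so that rejected attempts are auditable too.
        self._record(actor, source, "ingest", allowed)
        if not allowed:
            raise PermissionError(f"source {source!r} has not been approved")
        return len(rows)  # stand-in for the real load step

    def _record(self, actor: str, source: str, action: str, allowed: bool) -> None:
        self.audit_log.append(
            AuditEntry(datetime.now(timezone.utc), actor, source, action, allowed)
        )


ingestor = GovernedIngestor()
ingestor.approve_source("crm_exports", approver="data-governance")
loaded = ingestor.ingest("crm_exports", actor="etl-job", rows=[{"id": 1}, {"id": 2}])
```

An attempt to ingest from an unapproved source raises `PermissionError` and still leaves an entry in `audit_log`, so the question of who tried what can be answered after the fact.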

6. Pipelines as Products, Not Projects

A recurring mistake in AI programmes is treating pipelines as one-off delivery artefacts.

In reality, pipelines have:

Users (data scientists, engineers, analysts)

SLAs (freshness, reliability, impact on model accuracy)

Roadmaps (new features, optimisations)

Technical debt (just like any product)

When pipelines are productised, teams invest in:

Documentation and discoverability

Observability and alerts

Backwards compatibility

Intentional evolution

This shift in mindset is subtle but powerful. It moves AI from experimentation to infrastructure.
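Backwards compatibility is one of the investments above that can be checked mechanically. The sketch below is a simplified assumption of how such a check might look: schemas are modelled as plain mappings from column name to type name, adding a column is treated as compatible, and removing or retyping one is treated as breaking. The function name and the example columns are hypothetical.

```python
from typing import Dict, List


def breaking_changes(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """List changes that would break consumers of the old schema."""
    problems = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"removed column: {column}")
        elif new[column] != old_type:
            problems.append(f"type change: {column} {old_type} -> {new[column]}")
    return problems


v1 = {"customer_id": "string", "days_since_last_order": "float"}
v2 = {"customer_id": "string", "days_since_last_order": "int", "region": "string"}

issues = breaking_changes(v1, v2)
# The added "region" column is accepted; the retyped column is reported.
```

Run in a review or deployment step, a check like this lets a pipeline evolve intentionally: compatible changes go through, and breaking ones need a deliberate decision.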

7. The Strategic Payoff: Why Pipelines Create Competitive Advantage

From a leadership perspective, the question is not whether to invest in pipelines but whether to own them.

Strong AI data pipelines enable:

Faster deployment of new models

Lower marginal cost per AI use case

Safer experimentation

Regulatory resilience

Organisational learning at scale

In contrast, organisations that outsource or neglect their pipelines remain dependent on vendors and vulnerable to disruption.

In the long run, pipelines are the moat.

Conclusion: Build the System, Not Just the Model

As AI becomes embedded across products and operations, success will belong to organisations that understand a simple truth:

Models are replaceable. Pipelines are not.

Treating AI data pipelines as first-class products — designed, governed, and evolved deliberately — is what separates experimental AI from enduring capability.

If models are the visible tip of the iceberg, pipelines are the mass beneath the surface. Neglect them, and the system eventually fails. Invest in them, and AI becomes a compounding asset rather than a recurring disappointment.

FAQs

1. What are AI data pipelines?

AI data pipelines are systems that ingest, transform, store, and serve data to AI models across training and inference, including monitoring and governance layers.

2. Why are data pipelines more important than AI models?

Models commoditise quickly, while pipelines encode organisational knowledge, ensure reliability, and compound value over time.

3. What is the role of feature stores in AI pipelines?

Feature stores standardise feature definitions and make them reusable across models, which supports consistency between training and inference, governance, and faster experimentation.

4. How do data pipelines support AI governance?

They operationalise governance by enforcing access controls, logging decisions, enabling audits, and managing data lineage.

5. Should AI data pipelines be treated as products?

Yes. Treating pipelines as products improves reliability, usability, and long-term scalability of AI systems.