Introduction

For many organisations, artificial intelligence (AI) is becoming embedded in core decision-making, customer experiences, and operational processes. Yet while AI investment continues to accelerate, many leaders still struggle with a fundamental question:

How do we know whether our AI transformation is actually successful — and responsible?

Traditional digital transformation metrics focus heavily on delivery milestones, cost savings, or productivity gains. AI, however, introduces new challenges: probabilistic outputs, evolving models, ethical risk, regulatory scrutiny, and deep socio-technical change. Measuring success purely through financial or operational KPIs is no longer sufficient.

This article proposes a more robust answer: successful AI transformation must be measured across both performance and responsibility. Organisations that fail to do so may scale systems that are brittle, untrustworthy, or misaligned with societal and regulatory expectations.

Why AI Transformation Demands New Metrics

Unlike deterministic IT systems, AI systems learn from data, adapt over time, and influence human decisions. This creates three implications for measurement:

- Outcomes are probabilistic, not guaranteed

- Risks evolve post-deployment, not just at launch

- Value and harm can scale simultaneously

As highlighted in research from the World Economic Forum and MIT Sloan, organisations that succeed with AI treat governance and ethics as enablers of scale, not constraints on innovation.

This requires moving beyond narrow success indicators toward a balanced scorecard for responsible AI transformation.

The Five Dimensions of Responsible AI Success

AI transformation success can be assessed across five interdependent dimensions.

1. Strategic Alignment Metrics

Are we solving the right problems — responsibly?

AI initiatives frequently fail not because of poor models but because of weak strategic fit. Responsible AI starts with problem selection, not model selection.

Key metrics include:

- Percentage of AI use cases explicitly linked to strategic objectives

- Presence of documented AI value hypotheses (business + societal impact)

- Executive sponsorship and budget continuity across AI initiatives

- Alignment with corporate risk appetite and values
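To make the first two metrics concrete, here is a minimal Python sketch that computes them from a use-case register. The `UseCase` record, its fields, and the example entries are hypothetical; in practice the register might live in a portfolio tool or a spreadsheet.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UseCase:
    """One entry in a hypothetical AI use-case register."""
    name: str
    strategic_objective: Optional[str]  # None if not linked to any objective
    has_value_hypothesis: bool          # documented business + societal value hypothesis


def pct(numerator: int, denominator: int) -> float:
    """Percentage, returning 0.0 for an empty portfolio instead of dividing by zero."""
    return 100.0 * numerator / denominator if denominator else 0.0


def alignment_metrics(register: list[UseCase]) -> dict[str, float]:
    """Share of use cases linked to strategy and with a documented value hypothesis."""
    total = len(register)
    linked = sum(1 for uc in register if uc.strategic_objective)
    hypothesised = sum(1 for uc in register if uc.has_value_hypothesis)
    return {
        "pct_linked_to_strategy": pct(linked, total),
        "pct_with_value_hypothesis": pct(hypothesised, total),
    }


# Illustrative register only.
register = [
    UseCase("churn prediction", "customer retention", True),
    UseCase("invoice OCR", "operational efficiency", False),
    UseCase("generative chatbot pilot", None, False),
    UseCase("credit risk scoring", "responsible lending", True),
]
print(alignment_metrics(register))
```

Here the chatbot pilot is the use case with no strategic link, which is precisely the kind of initiative this metric is meant to surface.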

2. Data Readiness & Integrity Metrics

Is the data fit for both performance and fairness?

Data is one of the most common failure points in AI transformation, and one of the most underestimated ethical risks.

Operational metrics:

- Data completeness, accuracy, and timeliness

- Reduction of siloed or duplicated datasets

- Stability and automation of data pipelines

Responsible AI metrics:

- Coverage of bias and representativeness audits

- Percentage of datasets with documented provenance and consent

- Governance controls for sensitive or regulated data
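As a rough illustration, the sketch below computes one operational metric (field-level completeness) and two responsible AI metrics (documented provenance and consent, and bias audit coverage). The `DatasetCard` catalogue record, the function names, and the example data are assumptions for illustration, not a standard schema.

```python
from dataclasses import dataclass
from typing import Any


def completeness(records: list[dict[str, Any]], field: str) -> float:
    """Share of records (0 to 1) where `field` is present and not None or empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


@dataclass
class DatasetCard:
    """Hypothetical catalogue entry documenting one dataset."""
    name: str
    provenance_documented: bool
    consent_documented: bool
    bias_audited: bool


def documentation_coverage(cards: list[DatasetCard]) -> dict[str, float]:
    """Percentage of datasets passing each responsible AI documentation check."""
    n = len(cards) or 1  # avoid dividing by zero for an empty catalogue
    both = sum(c.provenance_documented and c.consent_documented for c in cards)
    audited = sum(c.bias_audited for c in cards)
    return {
        "pct_provenance_and_consent": 100.0 * both / n,
        "pct_bias_audited": 100.0 * audited / n,
    }


# Illustrative data only.
customers = [{"id": 1, "postcode": "AB1"}, {"id": 2, "postcode": None},
             {"id": 3, "postcode": "CD2"}, {"id": 4}]
catalogue = [
    DatasetCard("crm_customers", True, True, True),
    DatasetCard("web_clickstream", True, False, False),
    DatasetCard("legacy_loans", False, False, True),
]
print(completeness(customers, "postcode"))  # 0.5
print(documentation_coverage(catalogue))
```

Requiring provenance and consent together, rather than counting each separately, reflects the metric as stated: a dataset with a known source but no consent record still fails the check.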

High-performing organisations treat data governance as continuous infrastructure, not a compliance checkbox.

This aligns closely with emerging expectations under the European Union’s AI Act, where data quality and traceability are central risk controls.

A critical governance signal is whether AI investments are prioritised based on business impact and ethical risk, rather than technical novelty.

Responsible AI indicator:

High-impact, high-risk AI systems receive more oversight, not lower priority.

3. Model Lifecycle & Oversight Metrics

Can we see, control, and correct our AI systems over time?

AI success is not achieved at deployment; it is sustained performance under changing conditions.

Key lifecycle metrics include:

- Model performance drift over time

- Frequency of retraining and validation cycles

- Human override or escalation rates

- Time to detect and correct harmful or degraded outputs
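Several of these lifecycle metrics reduce to simple calculations once monitoring data is logged. The sketch below uses the Population Stability Index (PSI), one common drift measure among several, alongside an override rate and a mean time to detect. The function names, the equal-width binning, and the `eps` floor are illustrative assumptions. A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be set per model.

```python
import math
from datetime import datetime


def psi(baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `baseline`, using
    equal-width bins spanning the baseline's range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clipped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so empty bins do not cause log(0).
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def override_rate(decisions: int, overrides: int) -> float:
    """Share of AI recommendations that a human overrode or escalated."""
    return overrides / decisions if decisions else 0.0


def mean_hours_to_detect(incidents: list[tuple[datetime, datetime]]) -> float:
    """Mean gap, in hours, between a degraded or harmful output starting
    (first element) and being detected (second element)."""
    gaps = [(found - start).total_seconds() / 3600 for start, found in incidents]
    return sum(gaps) / len(gaps) if gaps else 0.0


# Illustrative usage with made-up monitoring data.
baseline = [i / 100 for i in range(100)]         # scores at validation time
shifted = [min(v + 0.3, 0.99) for v in baseline]  # scores after an upward shift
print(round(psi(baseline, baseline), 4))          # 0.0 (identical data, no drift)
print(psi(baseline, shifted) > 0.25)              # True (large shift is flagged)
print(override_rate(decisions=2000, overrides=140))  # 0.07
print(mean_hours_to_detect([
    (datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 15)),  # found after 6 hours
    (datetime(2024, 5, 3, 8), datetime(2024, 5, 4, 8)),   # found after 24 hours
]))  # 15.0
```

Because empty bins are floored at `eps`, PSI grows sharply when part of the baseline distribution disappears from current data, as in the shifted example; that sensitivity is often what a drift alert should surface.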

Responsible AI metrics:

- Explainability thresholds for high-impact decisions

- Auditability of model decisions and logs

- Clear ownership for post-deployment accountability

Organisations that cannot effectively monitor models in production cannot govern them ethically.

4. People, Skills & Cultural Metrics

Do people trust, understand, and improve the system?

AI transformation is ultimately a workforce transformation. Cultural resistance, fear, or misunderstanding can undermine even technically excellent systems.

Capability metrics:

- AI literacy levels across non-technical roles

- Cross-functional participation in AI projects

- Uptake of AI tools in day-to-day decision-making

Responsible AI indicators:

- Employee confidence in challenging AI outputs

- Transparency around how AI affects roles and decisions

- Existence of safe feedback and escalation channels

As noted in guidance from the OECD, responsible AI requires human agency, not blind automation.

5. Governance, Trust & Accountability Metrics

Can the organisation justify its AI decisions — internally and externally?

This is where many transformation metrics stop — and where responsible AI begins.

Governance metrics include:

- Clear classification of AI systems by risk level

- Defined accountability for AI decisions

- Regular ethical and regulatory reviews

Trust metrics (often overlooked):

- Customer trust and adoption rates

- Complaint or challenge frequency linked to AI decisions

- Regulator or auditor engagement outcomes
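Several governance and trust metrics can be derived from an AI system inventory. In the minimal sketch below, the `AISystem` record, the tier names (loosely modelled on the EU AI Act's risk-based categories), and the example figures are all hypothetical; actual classification under the Act requires legal assessment.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative tier names, loosely modelled on the EU AI Act's risk-based approach.
RISK_TIERS = {"minimal", "limited", "high", "unacceptable"}


@dataclass
class AISystem:
    """Hypothetical entry in an organisation's AI system inventory."""
    name: str
    risk_tier: Optional[str]          # None means not yet classified
    accountable_owner: Optional[str]  # None means no named owner
    decisions: int                    # AI decisions made in the reporting period
    complaints: int                   # complaints or challenges linked to those decisions


def governance_and_trust(inventory: list[AISystem]) -> dict[str, float]:
    """Portfolio-level risk classification, ownership, and complaint metrics."""
    n = len(inventory) or 1  # avoid dividing by zero for an empty inventory
    classified = sum(s.risk_tier in RISK_TIERS for s in inventory)
    owned = sum(s.accountable_owner is not None for s in inventory)
    decisions = sum(s.decisions for s in inventory)
    complaints = sum(s.complaints for s in inventory)
    return {
        "pct_risk_classified": 100.0 * classified / n,
        "pct_with_accountable_owner": 100.0 * owned / n,
        # Normalising by volume keeps the trust signal comparable across periods.
        "complaints_per_1000_decisions": (
            1000.0 * complaints / decisions if decisions else 0.0
        ),
    }


# Illustrative inventory only.
inventory = [
    AISystem("credit scoring", "high", "Head of Lending", 20_000, 50),
    AISystem("email triage", "minimal", "Operations Lead", 100_000, 10),
    AISystem("CV screening pilot", None, None, 0, 0),
]
print(governance_and_trust(inventory))
```

An unclassified system with no named owner, like the pilot in this example, is exactly the kind of gap these metrics are meant to expose before it reaches production.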

Organisations that embed governance early tend to move faster over time because they reduce rework, reputational damage, and regulatory shock.

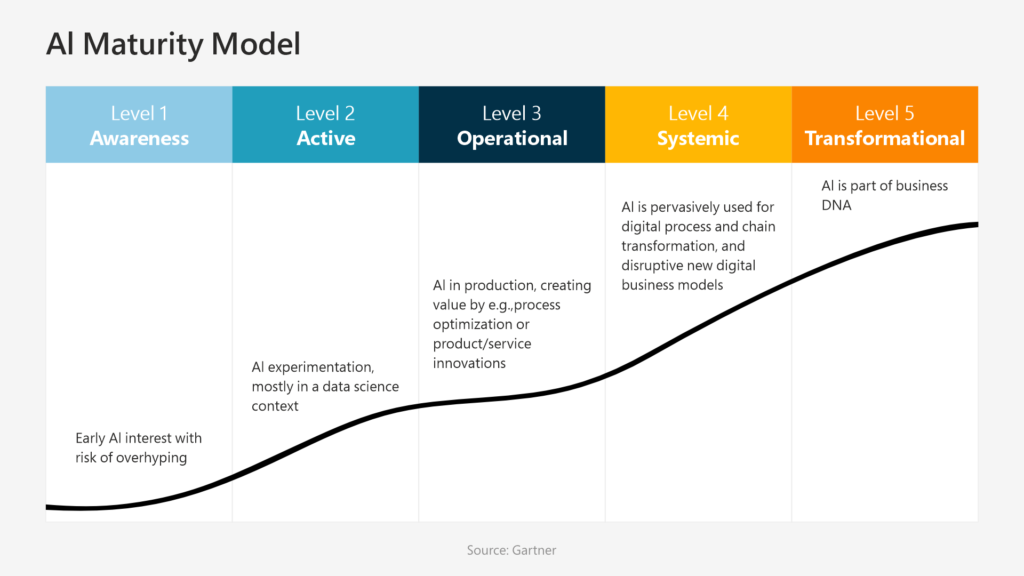

From Metrics to Maturity

These metrics evolve as organisations progress through AI maturity stages:

- Explorative: Technical feasibility, basic risk awareness

- Formalised: Performance tracking, governance frameworks

- Embedded: Continuous monitoring, ethical assurance

- Transformational: AI shapes strategy, standards, and ecosystem norms

The most mature organisations treat responsible AI metrics as leading indicators, not lagging controls.

What Leaders Should Take Away

A successful AI transformation is defined not by how many models you deploy but by:

- Whether AI decisions can be explained, challenged, and improved

- Whether value creation is matched by risk stewardship

- Whether trust compounds over time rather than eroding silently

Responsible AI is not the opposite of performance. It is the condition for sustainable performance at scale.

Conclusion: Measuring What Actually Matters

AI transformation forces leaders to rethink what “success” means. In a world of adaptive systems and heightened scrutiny, ethics, governance, and accountability are no longer optional extras — they are core operating metrics.

Organisations that measure only speed and savings may move fast, but they rarely move far. Those that measure value, trust, and responsibility together build AI systems that last.

FAQs

1. Is responsible AI measurable?

Yes. Metrics spanning data quality, governance coverage, explainability, trust, and accountability make responsibility observable and actionable.

2. Does responsible AI slow innovation?

In practice, it tends to accelerate sustainable scaling by reducing downstream risk, rework, and resistance.

3. Who owns AI governance metrics?

Shared ownership across leadership, product, data, legal, and ethics functions is essential.